Chapter 4: Testing Voice Applications

You may be an experienced Alexa developer, or you may be just getting started in the brand-new field of multimodal voice applications for Alexa and Google Home. You may be a company researching and evaluating the opportunities to place its brand in the voice-first search index. You could be a tester who has been given the task of going through all the possible dialogue branches in an Alexa Skill or Google Action. No matter who you are, we all face the same problem: how do we test our Skills and Actions?

In this chapter of our Voice Development Course for Beginners, you learn how to test the application you created in the previous chapters.

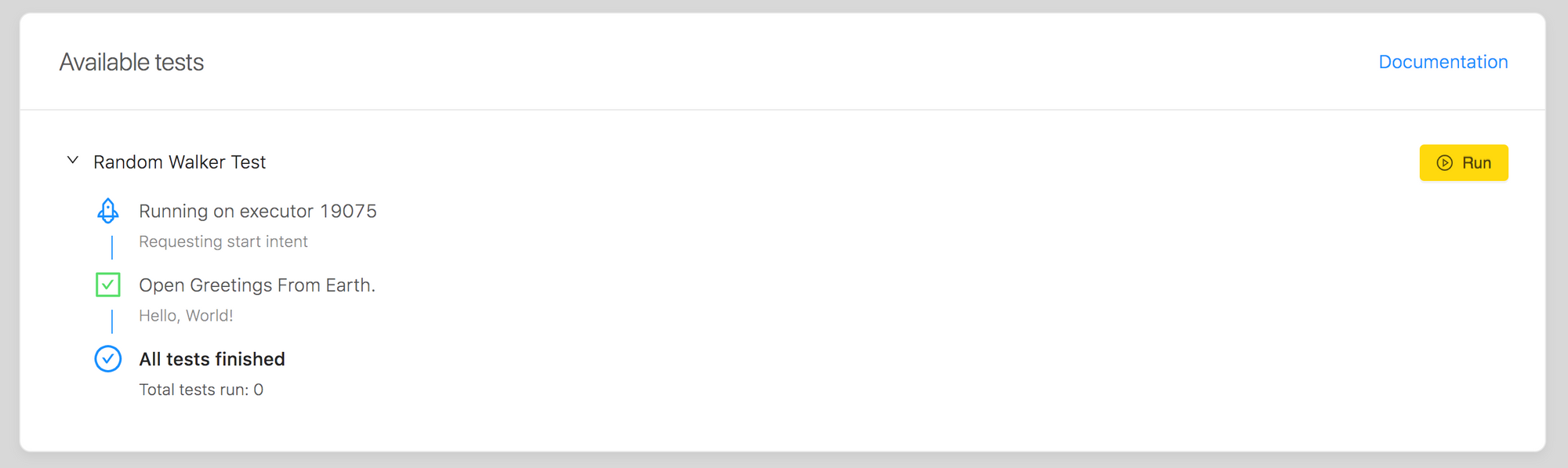

Running a Test

Hit the Save button in the BotTalk editor and head over to the Test tab at the top of the screen.

Make sure your volume is turned up (and put on headphones if you’re reading this at the office), because you’re about to experience something special: hearing your Assistant talk.

Hit the Run button to start the Random Walker Test and enjoy!

BotTalk has a unique feature: it plays audio scenarios right in the browser. That means you hear how your scenario will sound on Alexa or inside a Google Action. Hearing the actual dialog is very useful, since the spoken word can come across quite differently from the written script. It’s also a good idea to run the Random Walker Test to make sure that you didn’t break anything in your scenario. It’s your failsafe.

After you finish this course, you can learn how to write your own custom voice tests. It is actually quite easy.

Where is my answer?

If you launch the Random Walker Test, it randomly chooses one of three options:

- Cancel

- Stop

- Help

That doesn’t seem right. What we want to do is greet the user AND ask her for her name:

Assistant: Welcome to Greetings from Earth. What is your name?

Where is the answer to this question?

Well, although you asked the user a question and even put the voice assistant into “listening” mode with getInput, you still haven’t defined two critical things:

- What are you expecting your user to say?

- What should happen next, after she has said it?
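To picture why the test only ever picks Cancel, Stop, or Help, here is a minimal Python sketch. It is not BotTalk syntax and not how BotTalk implements the Random Walker Test. It assumes that those three options are available by default at every step, and it models a step as a plain dictionary that maps an expected user intent to the next step. The names `available_choices`, `pick_random_choice`, `tell_name`, and `greet_by_name` are hypothetical, chosen only for illustration.

```python
import random

# Assumption: these three options are offered at every step by default,
# which is why the test can choose them even in an unfinished scenario.
BUILT_IN_INTENTS = ["Cancel", "Stop", "Help"]


def available_choices(step_intents):
    """List everything a random walker could pick at one step.

    step_intents maps an expected intent name to the name of the next step
    (a hypothetical model, not BotTalk's scenario format).
    """
    return BUILT_IN_INTENTS + list(step_intents)


def pick_random_choice(step_intents, rng=random):
    """Pick one of the available choices at random, as a random walker would."""
    return rng.choice(available_choices(step_intents))


# The welcome step as it is now: it asks a question but expects no answer.
welcome_now = {}
print(available_choices(welcome_now))    # ['Cancel', 'Stop', 'Help']

# The welcome step once both missing pieces are defined:
# what the user may say ("tell_name") and what happens next ("greet_by_name").
welcome_later = {"tell_name": "greet_by_name"}
print(available_choices(welcome_later))  # ['Cancel', 'Stop', 'Help', 'tell_name']
print(pick_random_choice(welcome_later)) # one of the four, chosen at random
```

In this model the walker has nothing else to choose from until the step declares an expected answer and a next step, which matches what you just saw in the test.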

Next Steps

The next chapter teaches how to capture what the user has said by defining intents, utterances, and slots in BotTalk.