Chapter 1: Understand How Voice Assistants Work

How do voice assistants work? Users engage with voice assistants through back-and-forth conversations, which is very different from how we use desktop or mobile apps. This chapter of the introductory voice development course describes the whole process of how Amazon Alexa or Google Assistant interacts with a user, understands commands, and decides what to answer.

On this page

- [Demo] Sample Voice App

- [Infographic] The Architecture of Conversational Interfaces

- Key Terms

- [Infographic] Wake Word and Explicit Invocation

- [Infographic] Speech to Text and NLP

- [Infographic] Fulfillment WebHook / Web Service

- Voice Terms Glossary

- Next Steps

Voice App Demo

In the previous chapter of this course, we introduced the basic voice app you’re building, called “Greetings from Earth”.

To remind you what this app is about, let’s listen to what the voice application will sound like when finished. To play the conversation between a user and a voice assistant, we use BotTalk’s built-in automatic tester for voice applications.

Remember to click the Start button in front of the Happy Path:

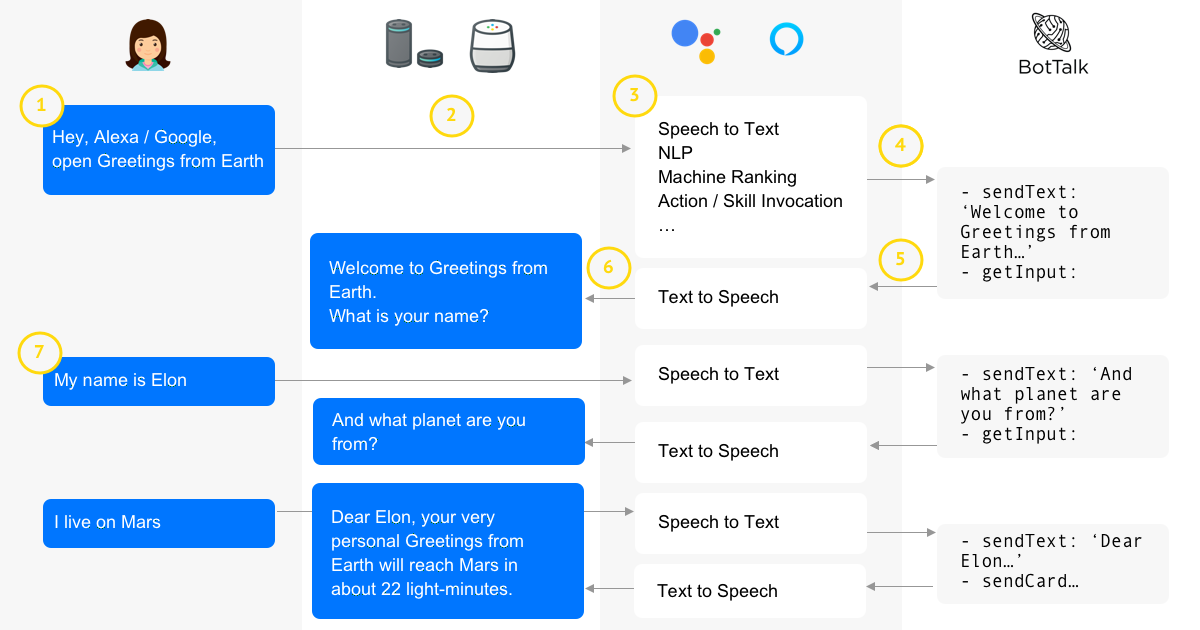

The Architecture of Conversational Interfaces

To visualize what happens behind the scenes when the user interacts with a voice assistant, take a look at this infographic. You can rerun the voice app demo and follow the steps on the infographic to understand them better.

- A user talks to an assistant and requests the voice app Greetings from Earth to start

- An assistant sends the speech to Amazon’s / Google’s cloud

- The cloud analyses the speech: converts speech to text, performs Natural Language Processing (NLP) and decides which Google Action / Alexa Skill to open

- The cloud determines that the fulfillment endpoint of this Google Action / Alexa Skill is hosted by BotTalk and sends the results of the NLP to BotTalk

- BotTalk understands that the user asked to open the voice app Greetings from Earth and formulates a response. This response consists of a greeting and a question. BotTalk sends the response back to Amazon’s / Google’s cloud

- The cloud converts the text to speech, which is then read aloud by the user’s device: “Welcome to Greetings from Earth. What is your name?”

- The user answers the question: “My name is Elon”.

The back-and-forth conversation goes on until the user decides to quit the voice app by saying “Stop”, or until the app has fulfilled the user’s need and quits by itself, wishing the user farewell.
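The round trip described in the steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not how Amazon, Google, or BotTalk implement it: the `NlpResult` class, the functions `cloud_understand`, `fulfillment`, and `one_turn`, the intent names `LaunchApp` and `Stop`, and the reply “Nice to meet you” are all made up for this example, and the “NLP” is a simple string check.

```python
from dataclasses import dataclass, field


@dataclass
class NlpResult:
    """What the cloud hands to the fulfillment endpoint (hypothetical shape)."""
    intent: str
    slots: dict = field(default_factory=dict)


def cloud_understand(transcript: str) -> NlpResult:
    """Stand-in for speech-to-text plus NLP in the platform cloud."""
    text = transcript.lower().strip()
    if "greetings from earth" in text:
        return NlpResult(intent="LaunchApp")
    if text.startswith("my name is "):
        name = transcript.strip()[len("my name is "):].strip()
        return NlpResult(intent="User_Introduction", slots={"name": name})
    if text == "stop":
        return NlpResult(intent="Stop")
    return NlpResult(intent="Unknown")


def fulfillment(result: NlpResult) -> str:
    """Stand-in for the fulfillment endpoint: picks the text to be spoken."""
    if result.intent == "LaunchApp":
        return "Welcome to Greetings from Earth. What is your name?"
    if result.intent == "User_Introduction":
        return f"Nice to meet you, {result.slots['name']}!"
    if result.intent == "Stop":
        return "Goodbye!"
    return "Sorry, I did not get that."


def one_turn(transcript: str) -> str:
    """One round trip: user speech -> cloud NLP -> fulfillment -> spoken reply."""
    return fulfillment(cloud_understand(transcript))


if __name__ == "__main__":
    for phrase in ["Alexa, open Greetings from Earth", "My name is Elon", "Stop"]:
        print(f"User: {phrase}")
        print(f"Assistant: {one_turn(phrase)}")
```

Each call to `one_turn` corresponds to one pass through the infographic: the device forwards the speech, the cloud turns it into an intent, and the fulfillment endpoint decides what the assistant says next.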

Key Terms

When learning voice development, one of the most daunting tasks can be understanding the terminology. The fact that different platforms (Amazon Alexa and Google Actions) name the same concepts differently doesn’t help.

That is why, for beginners in our voice application course, we decided to take a new approach. In this section we’ll introduce each of the terms as we go through the conversation flow we designed earlier for our sample voice app “Greetings from Earth”.

After you understand the terms on a very basic conceptual level, you can look up their formal definitions in our voice terms glossary.

Let’s examine the whole conversation that the infographic above describes step by step.

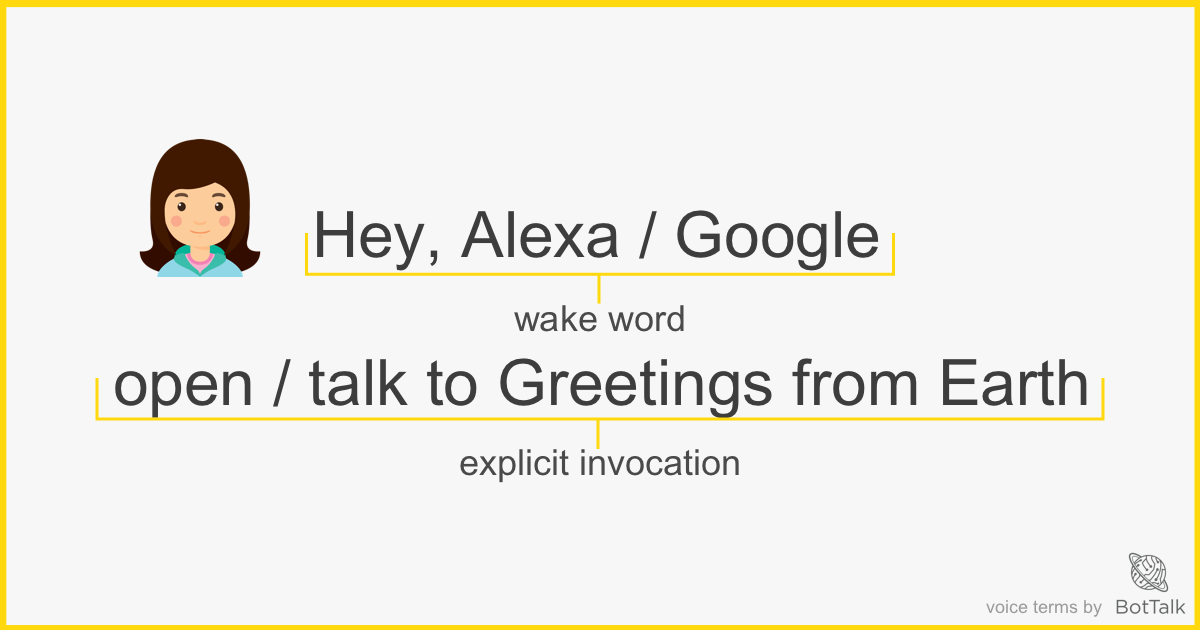

Wake Word and Explicit Invocation

To initiate a conversation a user says the following phrase:

User: Hey Alexa / Google, open / talk to Greetings from Earth

This phrase consists of two parts: wake word (the name of the assistant) and explicit invocation (the name of your Alexa Skill / Google Action):

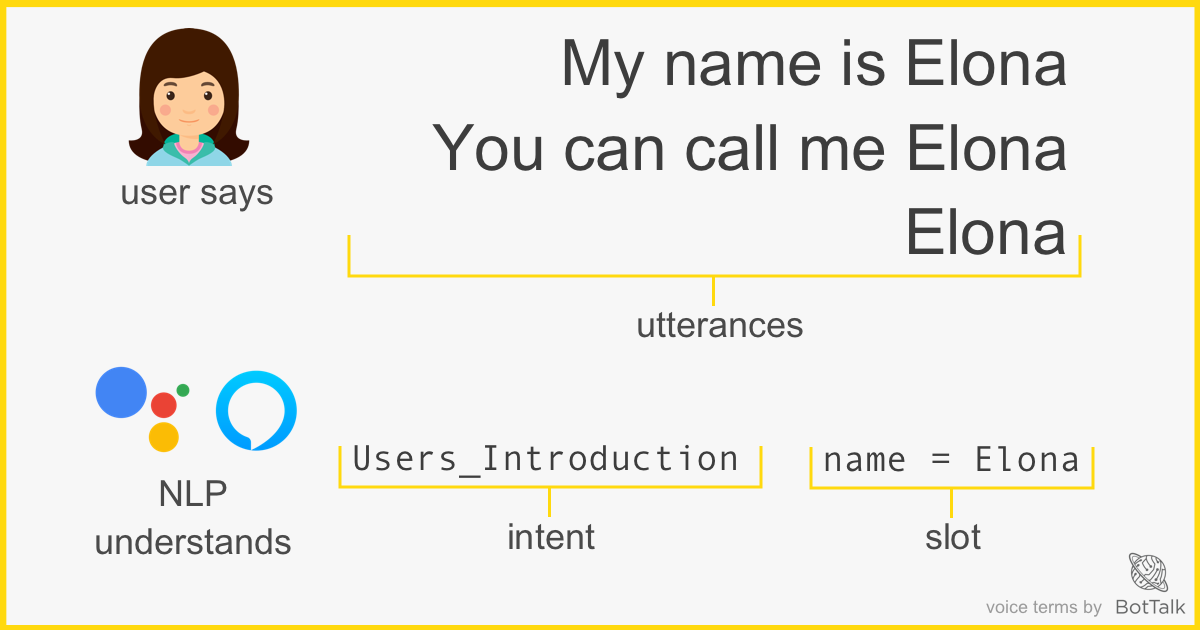
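To make the two parts concrete, here is a minimal Python sketch that splits a launch phrase into its wake word and invocation name. It is an illustration only: real assistants detect the wake word on the device and resolve the invocation name in the cloud, and the `WAKE_WORDS` list, the `LAUNCH_VERBS` list, and the `split_invocation` function are assumptions made for this example.

```python
import re

# Hypothetical lists for this example only.
WAKE_WORDS = ("hey alexa", "alexa", "hey google", "ok google")
LAUNCH_VERBS = ("open", "talk to")


def split_invocation(phrase: str):
    """Return (wake_word, invocation_name), or None if the phrase does not fit."""
    wake = "|".join(re.escape(w) for w in WAKE_WORDS)
    verbs = "|".join(re.escape(v) for v in LAUNCH_VERBS)
    # wake word, optional comma, launch verb, then the invocation name
    pattern = rf"^\s*({wake})\s*,?\s*(?:{verbs})\s+(.+?)\s*$"
    match = re.match(pattern, phrase, flags=re.IGNORECASE)
    if match is None:
        return None
    return match.group(1), match.group(2)


if __name__ == "__main__":
    print(split_invocation("Hey Alexa, open Greetings from Earth"))
    print(split_invocation("Ok Google, talk to Greetings from Earth"))
```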

Speech to Text and NLP

As soon as a voice assistant hears the wake word, it captures everything that the user says after the wake word and sends it to the cloud.

The cloud does several things. First, it converts speech to text, and then it analyses the text to figure out what the user wants.

What the user wants is called an intent. It’s your job as a voice developer to anticipate the different ways a user may phrase this intent. Those different ways of saying the same thing are called utterances. The more utterances you define, the more likely it is that a voice assistant understands a user.

Consider the following conversation between an assistant and a user:

Assistant: Welcome to Greetings from Earth. What is your name?

User: My name is Elona

Natural Language Processing analyzes the different ways a human can say something and translates them into something that computers can understand: in this case, one intent and one slot. This analysis is performed in Amazon’s and Google’s cloud and uses different techniques; Machine Learning Ranking is one of them.

In the infographic above, NLP analyzed the user’s phrase “My name is Elona” and identified it as the User_Introduction intent, with the slot name filled with the value “Elona”.

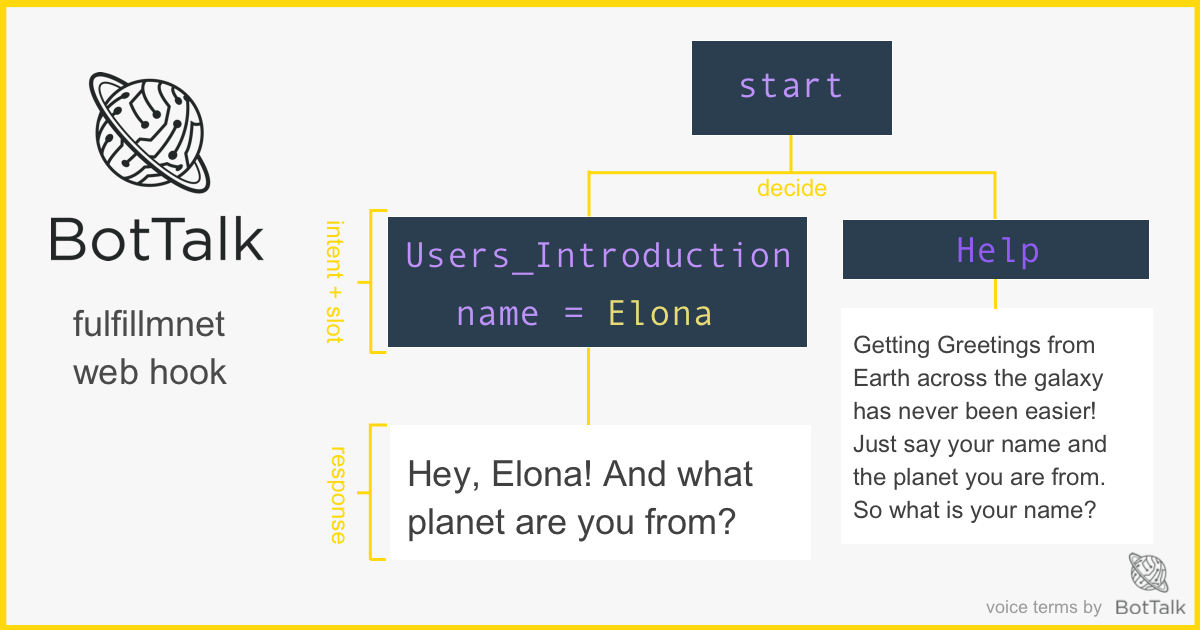
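The relationship between utterances, intents, and slots can be sketched with a toy matcher in Python. This is a deliberately simplified stand-in: the platforms use machine learning rather than exact templates, and the sample utterances, the `{name}` placeholder syntax, and the functions `template_to_regex` and `understand` are assumptions made for this example.

```python
import re

# Hypothetical sample utterances for one intent; {name} marks the slot.
UTTERANCES = {
    "User_Introduction": [
        "my name is {name}",
        "i am {name}",
        "call me {name}",
    ],
}


def template_to_regex(template: str):
    """Turn 'my name is {name}' into a regex with one named group per slot."""
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pattern += re.escape(part)    # literal text around the slots
        else:
            pattern += f"(?P<{part}>.+)"  # slot placeholder
    return re.compile(f"^{pattern}$", re.IGNORECASE)


def understand(text: str):
    """Return (intent, slots) for the first matching utterance, else (None, {})."""
    for intent, templates in UTTERANCES.items():
        for template in templates:
            match = template_to_regex(template).match(text.strip())
            if match:
                return intent, match.groupdict()
    return None, {}


if __name__ == "__main__":
    print(understand("My name is Elona"))
    print(understand("Call me Elona"))
```

With only the first utterance defined, “Call me Elona” would not be recognized at all, which is why adding more utterances makes a match more likely.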

Fulfillment WebHook / Web Service

The Amazon / Google cloud needs to send the results of the NLP analysis somewhere. It’s your job as an Alexa Skill / Google Action developer to receive the intents and slots and build logic around them.

BotTalk makes this process very easy. It serves as a fulfillment webhook (also called a web service) for your voice applications. You don’t need to run any servers or understand any code. All you have to do as a developer is write the conversational logic.

In other words, you tell BotTalk what to do next depending on which intent you received from the user.

One of the big things that BotTalk helps you with is maintaining the context. BotTalk automatically remembers what the user said the last time, so your voice app can have a more natural conversation and doesn’t ask the same questions again after the user relaunches it.
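To show what “maintaining the context” means in practice, here is a minimal Python sketch of fulfillment logic that remembers the user’s name between launches. It is not BotTalk’s scenario format or its internal implementation: the in-memory `user_memory` dictionary, the `respond` function, the `LaunchApp` intent name, and the reply texts other than the welcome question are assumptions made for this example.

```python
# Hypothetical in-memory store; a real service would persist this per user.
user_memory = {}


def respond(user_id: str, intent: str, slots: dict) -> str:
    """Pick the next reply based on the intent and what is already remembered."""
    memory = user_memory.setdefault(user_id, {})
    if intent == "LaunchApp":
        if "name" in memory:
            # Context from an earlier session: no need to ask again.
            return f"Welcome back, {memory['name']}!"
        return "Welcome to Greetings from Earth. What is your name?"
    if intent == "User_Introduction":
        memory["name"] = slots["name"]
        return f"Nice to meet you, {memory['name']}!"
    return "Sorry, I did not get that."


if __name__ == "__main__":
    print(respond("user-1", "LaunchApp", {}))
    print(respond("user-1", "User_Introduction", {"name": "Elona"}))
    print(respond("user-1", "LaunchApp", {}))  # relaunch: name is remembered
```

The same intent (`LaunchApp`) produces a different reply on the second launch because the stored context has changed, which is the behavior the paragraph above describes.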

Voice Terms Glossary

Here are the most basic terms you need in order to understand 80% of the official documentation for building Alexa Skills and Google Actions.

If you encounter a term you don’t understand during this course, just come back here and take a quick look.

- Wake word

- A command that the user says to tell Alexa / Google Assistant that they want to talk to it. For example, "Alexa, open Greetings from Earth." Or, "Ok, Google, talk to Greetings from Earth". Here, "Alexa" and "Ok, Google" are the wake words. Alexa users can select from a defined set of wake words: "Alexa", "Amazon", "Echo", and "Computer".

- Invocation name

- A name that represents the custom Alexa Skill or Google Action the user wants to engage with. The customer says a supported phrase (utterance) in combination with the invocation name to begin interacting with the voice app.

- Explicit invocation

- Explicit invocation occurs when a user tells the Amazon Echo / Google Assistant to open your Skill / Action by name. Optionally, the user can include a particular intent at the end of their invocation that takes them directly to the function they're requesting.

- Implicit invocation

- Implicit invocation occurs when a user requests to perform some task without invoking a Skill / Action by name. The Amazon Echo / Google Assistant attempts to match the user's request to a suitable Skill / Action, then presents recommendations to the user.

- Text-to-Speech (TTS)

- TTS converts a string of text to synthesized speech (Alexa's / Assistant's voice). The Amazon / Google cloud can take the plain text for TTS conversion.

- NLP / NLU

- Natural Language Processing / Understanding (NLP / NLU) is the capability of software to understand and parse user input. NLU is handled by Dialogflow for Google Actions and by the Alexa service for Alexa Skills.

- Utterance

- The actual phrase the user says to a voice assistant to communicate what they want to do, or to provide a response to a question of a voice assistant.

- Intent

- A machine-readable representation of a goal or task that users want to accomplish. An intent can have one or several arguments called slots that stand for information that varies.

- Fulfillment

- A server that handles an intent and determines the logic. It sends back the response: instructions for what the assistant should say to the user.

Next Steps

Now you have a grasp of the basic voice terminology! In the next chapter, you can move on to creating a new voice scenario in BotTalk.